You're reading the Keybase blog.

The Horror of a 'Secure Golden Key'

This week, the Washington Post's editorial board, in a widely circulated call for “compromise” on encryption, proposed that while our data should be off-limits to hackers and other bad actors, “perhaps Apple and Google could invent a kind of secure golden key” so that the good guys could get to it if necessary.

This theoretical “secure golden key” would protect privacy while allowing privileged access in cases of legal or state-security emergency. Kidnappers and terrorists are exposed, and the rest of us are safe. Sounds nice. But this proposal is nonsense, and, given the sensitivity of the issue, highly dangerous. Here’s why.

A “golden key” is just another, more pleasant, word for a backdoor—something that allows people access to your data without going through you directly. This backdoor would, by design, allow Apple and Google to view your password-protected files if they received a subpoena or some other government directive. You'd pick your own password for when you needed your data, but the companies would also get one, of their choosing. With it, they could open any of your docs: your photos, your messages, your diary, whatever.

The Post assumes that a “secure key” means hackers, foreign governments, and curious employees could never break into this system. It also assumes the system would be immune to bugs. The editorial envisions a magic tool that only the righteous may wield. Does this sound familiar?

Practically speaking, the Washington Post has proposed the impossible. If Apple, Google and Uncle Sam hold keys to your documents, you will be at great risk.

In case you're not a criminal

Perhaps the reason WaPo is so confused is that FBI Director James Comey has told the media that Apple's anti-backdoor stance only protects criminals. Unfortunately, he's not seeing beyond his own job, and WaPo didn't look much further.

Apple’s anti-backdoor policy aims to protect everyone. The following is a list of real threats their policy would thwart. Not threats to terrorists or kidnappers, but to 300 million Americans and 7 billion humans who are moving their intimate documents into the cloud. Make no mistake, what Apple and Google are proposing protects you.

Whether you're a regular, honest person or a US legislator trying to make sense of this issue, understand this list.

Threat #1. It Protects You From Hackers

If Apple has a key to unlock your data legally, the same key can also be used illegally, without Apple's cooperation. Home Depot and Target? They were recently hacked to the tune of 100 million accounts.

Despite great financial and legal incentives to keep your data safe, they could not.

But finance is mostly boring. Other digital documents are very, very personal.

So hackers have (1) stolen everyone's credit cards, and (2) stolen celebrities' personal pictures. Up next: your personal pics, videos, docs, messages, medical data, and diary. With the Washington Post's proposal, it will all be leaked, a kind of secure golden shower.

There is some hope. If your data were locked with a strong password that only you knew, only on your device, then the best hackers could get nothing by hacking Apple's data servers. They’d look for your pictures but find an unintelligible pile of goop instead.
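To make "locked with a password only you know" concrete, here is a minimal Python sketch of client-side encryption. It assumes PBKDF2 (from Python's standard library) to stretch the password into keys on the device, and it uses a toy SHAKE-256 keystream plus an HMAC tag only so the example runs without third-party packages; a real app would use a vetted construction such as AES-GCM from an audited library. The function names, the work factor, and the record layout are hypothetical, not Apple's or Keybase's design. The point is what the server ends up holding: a salt, a nonce, ciphertext, and a tag, with no password or key anywhere in it.

```python
import hashlib
import hmac
import secrets

# Illustration only: hand-rolled toy cipher, not for real use.
ITERATIONS = 200_000  # assumed PBKDF2 work factor, chosen for illustration


def derive_keys(password: str, salt: bytes) -> tuple[bytes, bytes]:
    """Stretch the password into 64 bytes, split into encryption and MAC keys."""
    master = hashlib.pbkdf2_hmac("sha256", password.encode(), salt, ITERATIONS, dklen=64)
    return master[:32], master[32:]


def keystream(key: bytes, nonce: bytes, length: int) -> bytes:
    """Toy keystream: SHAKE-256 over key || nonce, truncated to `length` bytes."""
    return hashlib.shake_256(key + nonce).digest(length)


def encrypt_on_device(password: str, plaintext: bytes) -> dict:
    """Runs on the user's device; only the returned record is uploaded."""
    salt = secrets.token_bytes(16)
    nonce = secrets.token_bytes(16)
    enc_key, mac_key = derive_keys(password, salt)
    stream = keystream(enc_key, nonce, len(plaintext))
    ciphertext = bytes(p ^ k for p, k in zip(plaintext, stream))
    # Encrypt-then-MAC so a wrong password or tampering is detected.
    tag = hmac.new(mac_key, salt + nonce + ciphertext, hashlib.sha256).digest()
    # No password and no key in the record: this is all the server ever sees.
    return {"salt": salt, "nonce": nonce, "ciphertext": ciphertext, "tag": tag}


def decrypt_on_device(password: str, record: dict) -> bytes:
    """Re-derive the keys from the password; fail loudly if the tag doesn't match."""
    enc_key, mac_key = derive_keys(password, record["salt"])
    signed = record["salt"] + record["nonce"] + record["ciphertext"]
    expected = hmac.new(mac_key, signed, hashlib.sha256).digest()
    if not hmac.compare_digest(expected, record["tag"]):
        raise ValueError("wrong password or tampered data")
    stream = keystream(enc_key, record["nonce"], len(record["ciphertext"]))
    return bytes(c ^ k for c, k in zip(record["ciphertext"], stream))
```

A hacker who copies every record off the server still has to guess the password, one slow PBKDF2 run per guess, for each record.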

To begin to protect yourself, you need the legal right to a real, working password that only you know.

Threat #2. It Protects You From Foreign-Government Breaches

As it stands, anyone, including a foreign government's agents, could be inside Apple, Google, and Microsoft, quietly collecting data and building dossiers on anyone in the world by harnessing the system normally used to answer "lawful" warrant requests. This is a different kind of risk from what we've seen with Home Depot and Target, because we can't see how often it's happening.

Even if you trust the U.S. government to act in your best interest (say, by foiling terrorists), do you trust all governments? If a door is open to one organization, it is open to all.

Again, this can only be solved with a real, working password that only you know.

Threat #3. It Protects You From Human Error

Did you know that on June 20, 2011, Dropbox let anyone on the site log in as any other user? On that day, anyone could read or download anyone else's documents. Will this happen again? Can laws against data leaks protect us? Of course not. Laws, policy, even honest, well-meaning effort can't prevent human error. It's inevitable.

When you host your data and your keys "in the cloud", your data is only as strong as the weakest programmer who has access.

On a technical tangent, one proposed solution to this (and to threats 1 and 2) gives your device half of a key, so a bug wouldn't expose your data to anyone unless they also got your device. (Security on iOS 7 worked this way.) This approach failed users because phones, computers, and tablets are thrown away, shared, sent in for service, refurbished, and recycled. Old devices are everywhere and easy to acquire. Apple recognized this and fixed it in iOS 8.
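Here is a minimal sketch of the split-key idea, assuming the simplest possible scheme (XOR of two random shares) rather than Apple's actual iOS 7 design, which this does not reproduce. The key is split into a hypothetical "device share" and "server share". Either share alone is indistinguishable from random bytes, so a server-side bug leaks nothing useful; but anyone holding an old device's share plus the server's share recovers the key. The function names are illustrative only.

```python
import secrets


def split_key(key: bytes) -> tuple[bytes, bytes]:
    """Split `key` into two shares; each share alone is uniformly random."""
    device_share = secrets.token_bytes(len(key))
    # server_share = key XOR device_share, so XOR-ing the shares restores the key.
    server_share = bytes(k ^ d for k, d in zip(key, device_share))
    return device_share, server_share


def combine_shares(device_share: bytes, server_share: bytes) -> bytes:
    """Recover the key; this requires BOTH shares."""
    if len(device_share) != len(server_share):
        raise ValueError("shares must be the same length")
    return bytes(d ^ s for d, s in zip(device_share, server_share))
```

Nothing in a scheme like this revokes a device share when you sell or recycle the phone, so every device you've ever used remains half of a working key.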

You must be allowed to throw away your data without hunting down every device you've ever used.

The only solution is a real, single password that only you know.

Threat #4. It Protects You From the Future

This is the greatest threat of all.

Our cloud data is stored for eternity, not for the moment. Legislation and company policy cannot guarantee backups are destroyed. Our government may change, and what qualifies as a "lawful" warrant tomorrow might be illegal today. Similarly, your eternal data might be legal today and a threat tomorrow.

What you consider cool today might be an embarrassment or personal risk tomorrow. A photo you can rip to pieces, a letter you can shred, a diary you can burn, an old flag you can take out into the woods with your friends and shoot with a BB gun till it's destroyed and then have a nice, cold beer to celebrate. Cheers to that.

But memories in the cloud are there forever. You will never be able to destroy them. That data is backed up, distributed, redundant, and permanent. I can tell you firsthand: do not assume that when you click "delete" a file is gone. Take Mary Elizabeth Winstead’s word for it. Bugs and tape backups often keep things around, regardless of the law or programmer effort. This is one of the hairiest technical problems of our time.

So how can you burn that digital love letter, or tear up that digital picture? The only answer is to start with it encrypted, and then throw away the only key.
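This technique is usually called crypto-shredding. Below is a minimal Python sketch under stated assumptions: a hypothetical `ShreddableStore` keeps a random per-item key on the device and replicates only ciphertext to several "cloud" copies, and `shred` deletes the key while leaving every copy in place. The cipher is a toy SHAKE-256 keystream chosen so the example needs only the standard library, and Python's `del` does not securely wipe memory, so treat this as an illustration of the idea rather than a secure implementation.

```python
import hashlib
import secrets


def xor_stream(key: bytes, nonce: bytes, data: bytes) -> bytes:
    """Toy SHAKE-256 stream cipher; the same call encrypts and decrypts."""
    stream = hashlib.shake_256(key + nonce).digest(len(data))
    return bytes(a ^ b for a, b in zip(data, stream))


class ShreddableStore:
    """Hypothetical store: keys stay on the device, ciphertext is replicated."""

    def __init__(self, replicas: int = 3):
        self.local_keys: dict[str, bytes] = {}  # never uploaded
        self.cloud_copies: list[dict[str, tuple[bytes, bytes]]] = [
            {} for _ in range(replicas)
        ]

    def put(self, item_id: str, plaintext: bytes) -> None:
        key, nonce = secrets.token_bytes(32), secrets.token_bytes(16)
        self.local_keys[item_id] = key
        blob = (nonce, xor_stream(key, nonce, plaintext))
        for copy in self.cloud_copies:  # stands in for backups, mirrors, tape
            copy[item_id] = blob

    def get(self, item_id: str) -> bytes:
        key = self.local_keys[item_id]  # raises KeyError once shredded
        nonce, ciphertext = self.cloud_copies[0][item_id]
        return xor_stream(key, nonce, ciphertext)

    def shred(self, item_id: str) -> None:
        # Only the key is destroyed; every cloud copy is left untouched.
        del self.local_keys[item_id]
```

The design choice is the whole point: you never have to find and delete every replica, because without the key each one is just noise.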

You need the legal right to use software that makes you the sole owner of that key.

~ ~ ~ ~ ~ ~ ~ ~ ~

The above are all practical threats to good people. Still, even if you're sitting back feeling immune to embarrassment, hackers, foreign governments, bugs, dystopias, and disgruntled employees, there are still deep, philosophical, human considerations.

Consideration #1 - The invasion of personal space should be detectable

Even if you have nothing to hide in your home, you'd like to know if it's been entered.

In general, when your personal space is invaded, you want to know. Historically, this was easy. You had neighbors who could watch your doors, maybe some cameras, maybe an alarm system. You licked your envelopes. An intruder, legal or not, was someone you could hope to catch.

Therefore, in the absence of any sign of a breach, you could believe your home was not entered by the police or a criminal. This felt good. It even made you like your government.

When Apple built iOS 8, they took the stance that your data qualifies as personal space. Even if you host it in the cloud. For someone to break in, they have to come through you.

Consideration #2 - Our cloud data is becoming an extension of our minds

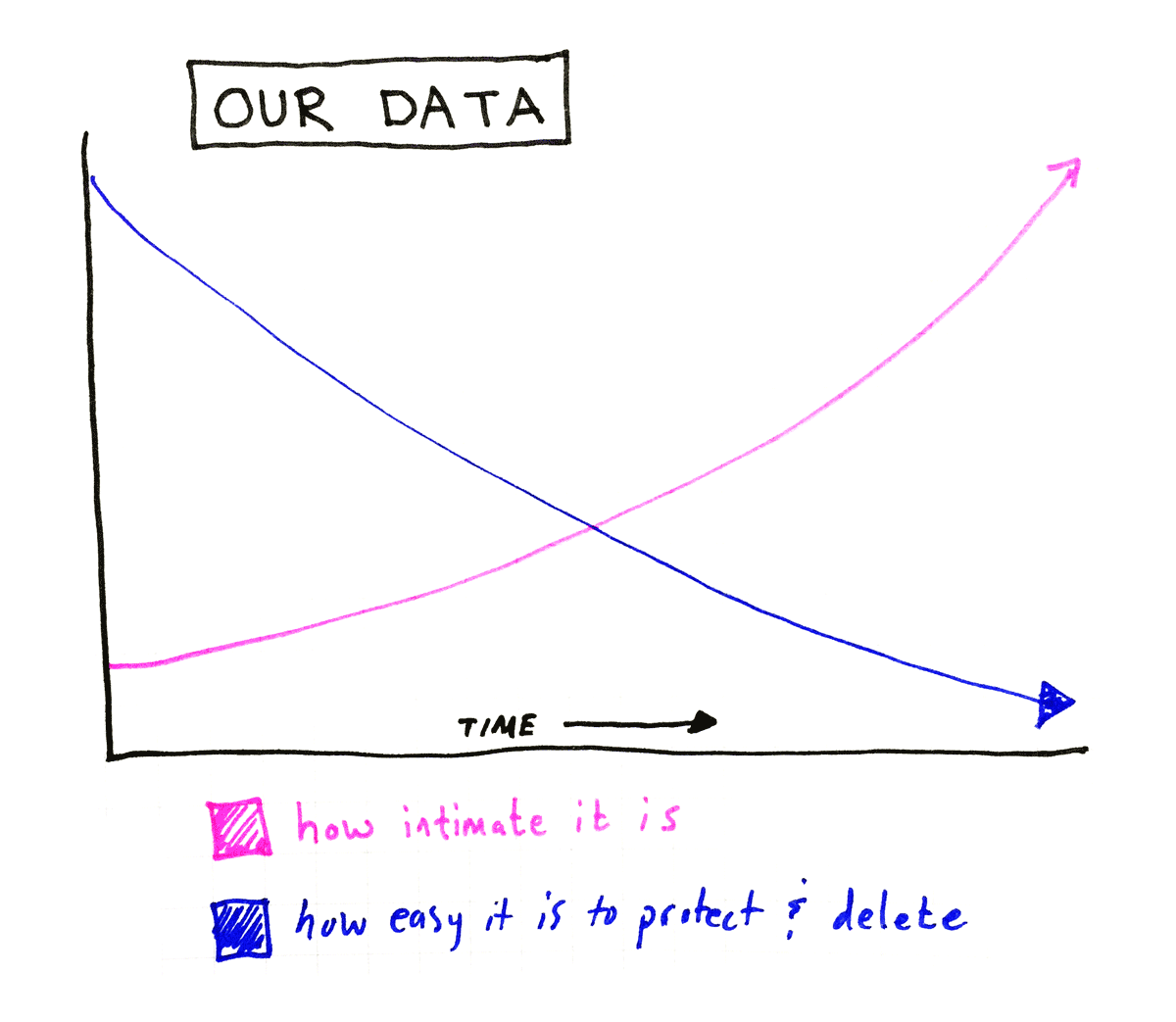

Beyond all the technical considerations, there is a sea change in what we are digitizing.

We whisper “I love you” through the cloud. We have pictures of our kids in the bathtub. Our teens are sexting. We fight with our friends. We talk shit about the government. We embarrass ourselves. We watch our babies on cloud cameras. We take pictures of our funny moles. We ask Google things we might not even ask our doctor.

Even our passing thoughts and fears are going onto our devices.

Time was, all these things we said in passing were ephemeral. We could conveniently pretend to forget. Or actually forget. Thanks to the way our lives have changed, we no longer have that option.

This phenomenon is accelerating. In 10 years, our glasses may see what we see and hear what we hear. Our watches and implants and security systems of tomorrow may know when we have fevers, when we're stressed out, when our hearts are pounding, when we have sex and, wow, who's in the room with us, and who's on top and what direction they're facing*. Google and Apple and their successors will host all this data.

We're not talking about documents anymore: we're talking about everything.

You should be allowed to forget some of it. And to protect it from all the dangers mentioned above.

You should want all this intimate data password-protected, with a single key only you know. You should hope that Google, Apple, and Microsoft all support this decision. More important, you should hope that the government legally allows them and even encourages them to make this decision. It's a hard enough technical problem. Let's not make it a legal one.

In conclusion

Is Apple's solution correct? I don't know. It needs to be studied. But either way, they should be allowed to try. They should be allowed to make software with no backdoor.

Is the Washington Post's "secure golden key" a good idea? No, it isn't. Whether it's legally enforced or voluntary, it's a misguided, dangerous proposal. It will become more dangerous with time.

Honest, good people are endangered by any backdoor that bypasses their own passwords.

-Chris Coyne (comments welcome)

Thanks to Max Krohn, Alexis Ohanian, and Tim Bray for reading drafts.

Super big thanks to Christian Rudder for a crapload of edits.

* Heart rate + GPS + accelerometer + compass on each partner
